Video Subtitle Generation Platform Supporting Multilingual Subtitles with Large Language Models to Launch on November 3

~Toward Universal Access to Video Content~

INISOFT Co., Ltd.

INISOFT Co., Ltd. (Room 401, VORT AOYAMA, 2-7-14 Shibuya, Shibuya-ku, Tokyo; President: Jaewung Lee; hereinafter "INISOFT") has developed "INI AI Subtitle agent for VoD," a multilingual subtitle generator for video on demand (VoD) that uses Large Language Models (LLMs). The service will be available from November 3, 2025.


Background of the development of "INI AI Subtitle agent for VoD"

INISOFT provides DRM (Digital Rights Management) for short videos and other video content, as well as its live streaming platform, "INI Live Streaming Platform." Working with a Korean entertainment company, we have been delivering performances by leading K-POP artists to fans around the world through "Beyond LIVE" (https://beyondlive.com/), a platform dedicated to online concerts.

For live streaming, INISOFT plans to begin providing multilingual subtitles to K-POP fans worldwide using "INI AI Subtitle," announced last year, which generates and distributes multilingual subtitles in real time from live audio. INI AI Subtitle will also be used in the live streaming function of the fan platform, where artists and fans interact in real time, to generate and provide subtitles as the stream happens, allowing fans around the world to communicate instantly across language barriers.

To expand its multilingual subtitling service, INISOFT has developed and officially released "INI AI Subtitle agent for VoD," which combines the know-how cultivated through AI subtitle generation for live performances with new UI/UX functions designed for video-on-demand operations.

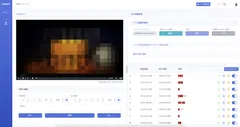

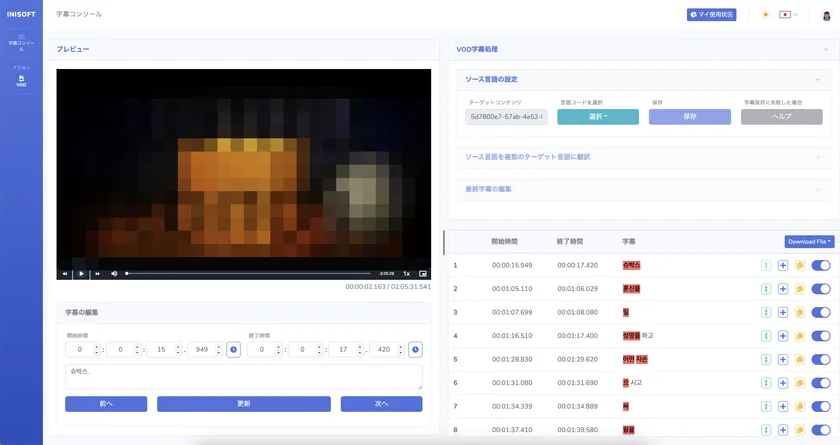

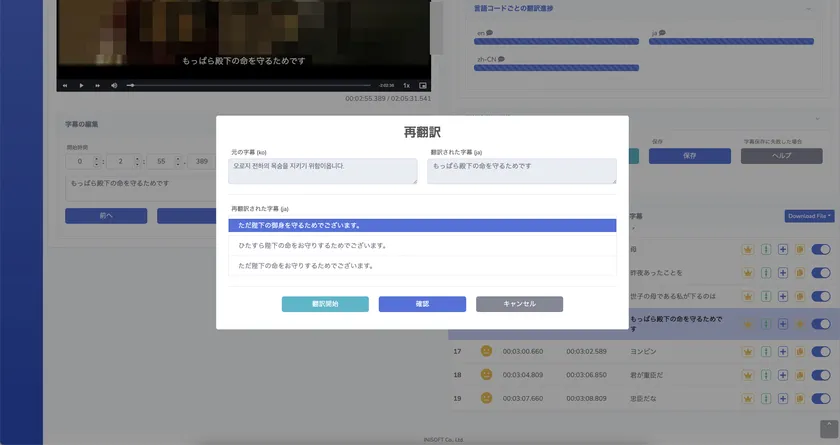

Subtitle editing screen

Translation editing screen using a large language model

(*) For rights reasons, the video footage in these screenshots has been processed. In actual use, the uploaded source video and the delivery video are played back during editing.

Features of "INI AI Subtitle agent for VoD"

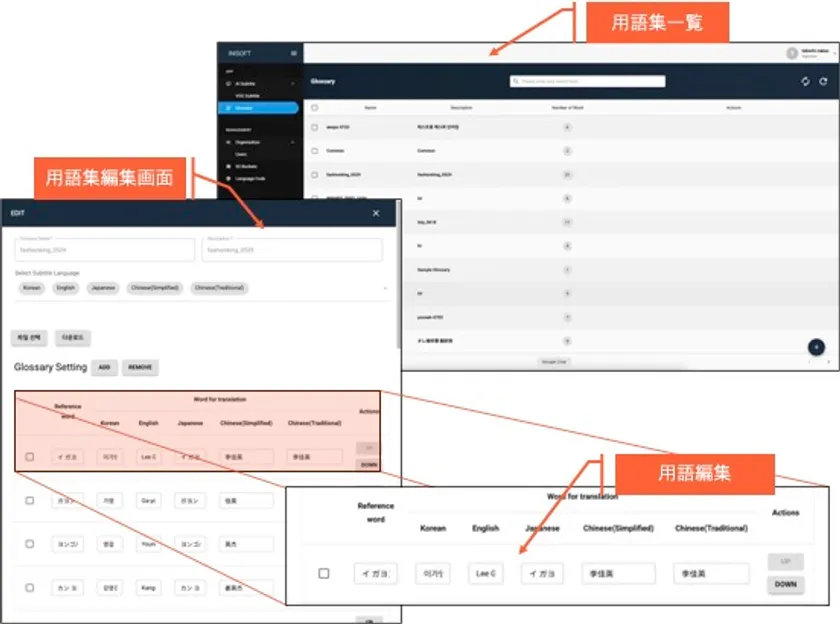

1. Language and glossary settings

You can set the original language spoken in the video as well as multiple target languages for the subtitles to be delivered. The following 19 languages are currently supported:

● Japanese

● Korean

● English

● Simplified Chinese

● Traditional Chinese

● Indonesian

● German

● Spanish

● French

● Italian

● Polish

● Portuguese

● Vietnamese

● Turkish

● Russian

● Arabic

● Hindi

● Thai

● Filipino

For words that speech recognition may not transcribe correctly, such as personal names, you can create and register a glossary (word dictionary) for each event to prevent misrecognition and mistranslation by the AI.

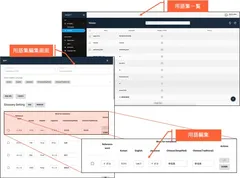

Glossary screen
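The release does not say how the glossary is applied internally. Purely as an illustration, the sketch below assumes a per-event glossary that maps each correct term to its known misrecognized variants and rewrites an STT transcript before translation. The `Glossary` class and its fields are hypothetical, and the word-boundary matching assumes a space-delimited language such as English.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Glossary:
    """Hypothetical per-event glossary: correct term -> known misrecognized variants."""

    event: str
    entries: dict[str, list[str]] = field(default_factory=dict)

    def apply(self, transcript: str) -> str:
        """Replace every known misrecognized variant with its correct term."""
        for term, variants in self.entries.items():
            for variant in variants:
                # \b stops a variant from matching inside a longer word;
                # this only works for space-delimited languages.
                pattern = r"\b" + re.escape(variant) + r"\b"
                # A function replacement avoids backslash escapes in `term`.
                transcript = re.sub(pattern, lambda _m: term, transcript, flags=re.IGNORECASE)
        return transcript


glossary = Glossary("concert-2025", {"INISOFT": ["ini soft", "any soft"]})
print(glossary.apply("Streaming by any soft tonight"))  # Streaming by INISOFT tonight
```

Scoping the glossary to an event keeps, for example, one tour's artist names from rewriting another event's transcript.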

2. Selectable SPEECH to TEXT models

The models used to transcribe audio from video (SPEECH to TEXT, STT) each have strengths and weaknesses depending on the language and speaking style. This system supports multiple STT models and lets the user choose which one to use for transcription, so the model with the best recognition accuracy for a given video can be used. The following models are currently available (subject to change with future development):

● Azure

● OpenAI

● Amazon

● daglo

● Tencent

● Whisper

● BytePlus

If subtitle data in the video's original language already exists, you can import it, skip transcription, and proceed directly to multilingual subtitle generation.
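One way to picture model selection plus the import shortcut is a registry of interchangeable transcribers keyed by the name the user picks. This is a hypothetical sketch, not INISOFT's implementation: the registry, the `source_cues` function, and the `(start, end, text)` cue shape are all assumptions, and the single registered backend is a placeholder rather than a real vendor call.

```python
from typing import Callable, Optional

# A cue is (start_seconds, end_seconds, text).
Cue = tuple[float, float, str]
Transcriber = Callable[[str], list[Cue]]

# Hypothetical registry of STT backends keyed by the name the user selects.
STT_BACKENDS: dict[str, Transcriber] = {}


def register(name: str) -> Callable[[Transcriber], Transcriber]:
    """Decorator that adds a transcriber to the registry under `name`."""
    def decorator(fn: Transcriber) -> Transcriber:
        STT_BACKENDS[name] = fn
        return fn
    return decorator


@register("dummy")
def dummy_transcriber(video_path: str) -> list[Cue]:
    # Placeholder; a real backend would call a vendor STT service here.
    return [(0.0, 2.5, f"transcript of {video_path}")]


def source_cues(video_path: str, model: str, imported: Optional[list[Cue]] = None) -> list[Cue]:
    """Return source-language cues, skipping STT when subtitle data was imported."""
    if imported is not None:
        return imported
    if model not in STT_BACKENDS:
        raise ValueError(f"unknown STT model: {model!r}")
    return STT_BACKENDS[model](video_path)
```

Because every backend shares one signature, swapping models per video is a one-argument change, and imported subtitles bypass the registry entirely.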

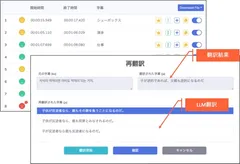

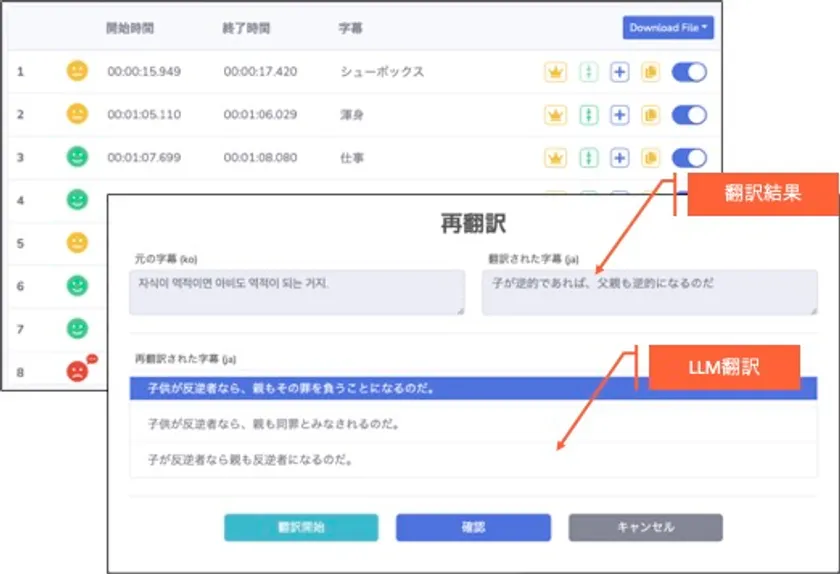

3. Translation by Large Language Models

To meet the needs of different video types and users, the system can translate transcription results (SPEECH to TEXT: STT) with both machine translation and Large Language Model (LLM) translation, and the user decides which result to use. Machine translation is used for the initial translation of the transcription results.

● Machine translation: suited to news, sports broadcasts, and similar content where a grammatically accurate and reproducible translation is required.

● LLM translation: suited to dramas, movies, and similar content where a more natural translation that follows the context and historical background is required.
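A minimal sketch of how the two candidates might sit side by side, assuming each subtitle cue stores both translations with machine translation as the default choice. The `TranslatedCue` type is hypothetical, and the actual translation calls are omitted.

```python
from dataclasses import dataclass
from typing import Literal, Optional

Engine = Literal["mt", "llm"]


@dataclass
class TranslatedCue:
    """Hypothetical cue holding both translation candidates for one subtitle."""

    source: str
    mt: str                    # machine translation, used as the initial result
    llm: Optional[str] = None  # LLM translation, filled in once requested
    chosen: Engine = "mt"

    def select(self, engine: Engine) -> None:
        """Let the user pick which candidate is delivered."""
        if engine == "llm" and self.llm is None:
            raise ValueError("no LLM translation for this cue yet")
        self.chosen = engine

    @property
    def text(self) -> str:
        """The translation that will be delivered."""
        if self.chosen == "llm" and self.llm is not None:
            return self.llm
        return self.mt


cue = TranslatedCue(source="안녕하세요", mt="Hello")
cue.llm = "Hi, everyone"
cue.select("llm")
print(cue.text)  # Hi, everyone
```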


4. Subtitle editing

The results of transcription and translation can be edited in various ways on the subtitle editing screen.

Merging: Two subtitle entries can be merged into one, and a merge can be undone to restore the original entries.

Adding and copying: New subtitle entries can be added, or existing ones copied, for example to split a single sentence or to display text that does not appear in the audio.

Deletion: Subtitles that should not be shown, whether because of misrecognition or for production reasons, can be excluded from display.

Time adjustment: A subtitle's time code can be set simply by moving the video on the editing screen to the point where the subtitle should appear.
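The editing operations above can be modeled on a simple cue record. The sketch below is a hypothetical illustration, not the product's data model: it assumes times in seconds, keeps the original cues inside a merged cue so the merge can be undone, marks deleted cues as hidden instead of discarding them, and formats time codes in the SRT-style `HH:MM:SS,mmm` notation (an assumed output format).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Cue:
    """Hypothetical subtitle entry; times are in seconds."""

    start: float
    end: float
    text: str
    hidden: bool = False                 # "deleted" cues are kept but not delivered
    merged_from: tuple["Cue", ...] = ()  # originals, kept so a merge can be undone


def merge(a: Cue, b: Cue) -> Cue:
    """Merge two consecutive cues into one, remembering the originals."""
    return Cue(a.start, b.end, f"{a.text} {b.text}", merged_from=(a, b))


def unmerge(cue: Cue) -> list[Cue]:
    """Restore the cues a merged cue was built from; other cues are returned as-is."""
    return list(cue.merged_from) if cue.merged_from else [cue]


def move_to_playhead(cue: Cue, playhead: float) -> Cue:
    """Start the cue at the current playback position, keeping its duration."""
    return replace(cue, start=playhead, end=playhead + (cue.end - cue.start))


def visible(cues: list[Cue]) -> list[Cue]:
    """Cues that will actually be displayed."""
    return [c for c in cues if not c.hidden]


def timecode(seconds: float) -> str:
    """Format seconds as an SRT-style HH:MM:SS,mmm time code."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"
```

Keeping cues immutable means every edit returns a new value, which makes undo straightforward to layer on top.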

5. Dedicated translation accounts and access restrictions

When translation work is shared among multiple people or outsourced, the range of data each person can access in the system must be restricted. The administrator can create editor accounts as needed and set access permissions for each piece of content on a per-account basis.
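A per-account, per-content permission check could look roughly like the following. This is a hypothetical sketch: the `AccessControl` class, the separate admin set, and the string content IDs are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AccessControl:
    """Hypothetical permission table: editor account -> content IDs it may edit."""

    admins: set[str] = field(default_factory=set)
    grants: dict[str, set[str]] = field(default_factory=dict)

    def create_editor(self, account: str) -> None:
        """Create an editor account with no content access yet."""
        self.grants.setdefault(account, set())

    def grant(self, account: str, content_id: str) -> None:
        """Allow an existing editor account to edit one piece of content."""
        if account not in self.grants:
            raise KeyError(f"no such editor account: {account}")
        self.grants[account].add(content_id)

    def revoke(self, account: str, content_id: str) -> None:
        """Remove an editor's access to one piece of content, if granted."""
        self.grants.get(account, set()).discard(content_id)

    def can_edit(self, account: str, content_id: str) -> bool:
        """Administrators can edit everything; editors only granted content."""
        return account in self.admins or content_id in self.grants.get(account, set())


acl = AccessControl(admins={"admin"})
acl.create_editor("vendor-a")
acl.grant("vendor-a", "concert-01")
```

Access defaults to deny: a new editor account sees nothing until content is explicitly granted, which suits the outsourcing scenario described above.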

6. Storage Management

Videos to be transcribed and translated are uploaded to cloud storage registered with the system. In addition to the storage provided by the system, users can register and use their own storage; currently, only AWS S3 is supported.

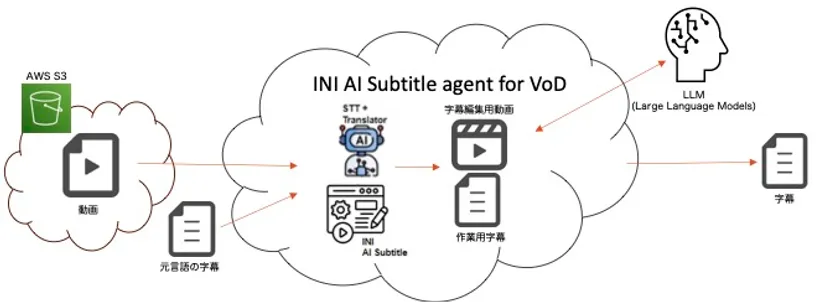

System configuration of "INI AI Subtitle agent"

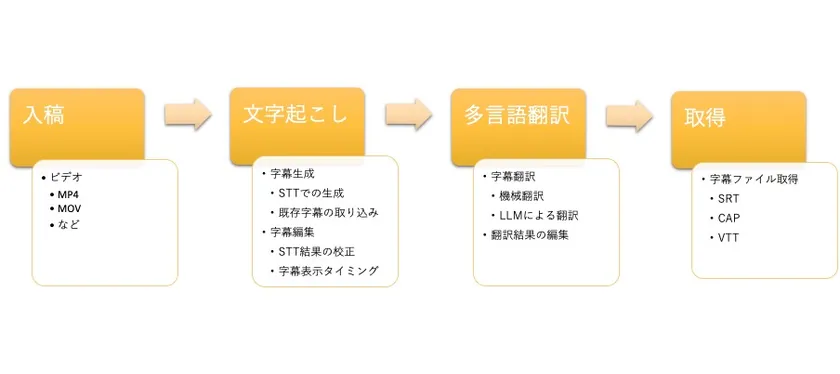

Processing sequence of "INI AI Subtitle agent"

Exhibit at Inter BEE 2025

INISOFT will exhibit at Inter BEE 2025 (Media Solution Section), where we will demonstrate the "INI Live Streaming Platform," which supports the "INI AI Subtitle agent for VoD" introduced in this release. We look forward to your visit.

Date: Wednesday, November 19 - Friday, November 21, 2025

Location: Makuhari Messe (Booth: 8305 in Hall 8)

URL: https://www.inter-bee.com/ja/


Company Profile

<Head Office>

Company name: INISOFT Co., Ltd.

Representative: Jaewung Lee, President and Representative Director

Location: A-519, BundangSuji U-Tower, 767 Shinsu-ro, Suji-gu, Yongin-si, Gyeonggi-do 16827 Korea

Established: 2001

URL: https://www.inisoft.tv/

<Japan Branch>

Company name: iNisoft Japan Branch

Location: Room 401, VORT AOYAMA, 2-7-14 Shibuya, Shibuya-ku, Tokyo 150-0002, Japan

Company names and product/service names mentioned in this press release are registered trademarks or trademarks of their respective companies. Images show products under development and may differ from the final product.
